Job Opening for Senior Data Engineer / Lead Data Engineer – Reference from AEM Skills

Unlock Your Potential with Senior and Lead Data Engineer Roles in Bangalore

Are you ready to advance your career in data engineering? We’re hiring Senior Data Engineers and Lead Data Engineers for exciting opportunities in Bangalore. If you’re passionate about working with cutting-edge technologies and have expertise in Python, SQL, Adobe RTCDP, and cloud platforms such as AWS, Azure, or GCP, this could be the role for you!

🛠️ About the Job

We are looking for seasoned professionals with a proven track record in data engineering, eager to solve complex challenges in a fast-paced environment.

Position Details:

1. Senior Data Engineer

- Experience Required: 5 to 9 years

- Skills Required: Python, SQL, Adobe RTCDP (Real-Time CDP), AWS/Azure/GCP

2. Lead Data Engineer

- Experience Required: 8 to 13 years

- Skills Required: Python, SQL, Adobe RTCDP (Real-Time CDP), AWS/Azure/GCP

📍 Location: Bangalore

- Final Round: In-person (Face-to-Face) interview in Bangalore. Only apply if you’re available for F2F interviews.

💼 Key Responsibilities

- Build and optimize data pipelines, ensuring efficient handling of both structured and unstructured data.

- Develop robust data models and data catalogs, enabling seamless data management and analytics.

- Design scalable solutions using cloud platforms (AWS, Azure, GCP) and implement advanced data engineering practices.

- Work extensively with Adobe Real-Time Customer Data Platform (RTCDP) for customer data management.

- Collaborate with cross-functional teams in an Agile environment to deliver data-driven solutions.

- Write and optimize complex SQL queries and scripts to enable insightful analytics.

- Use programming knowledge (e.g., JavaScript) to automate workflows and enhance platform capabilities.

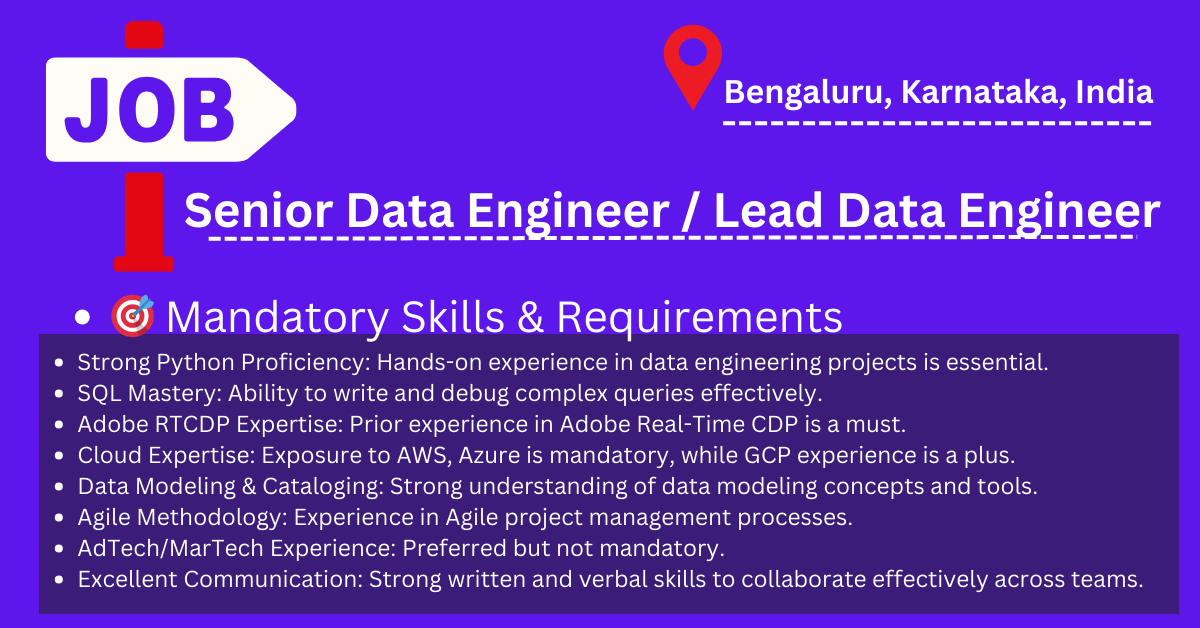

🎯 Mandatory Skills & Requirements

- Strong Python Proficiency: Hands-on Python experience in data engineering projects is essential.

- SQL Mastery: Ability to write and debug complex queries effectively.

- Adobe RTCDP Expertise: Prior experience in Adobe Real-Time CDP is a must.

- Cloud Expertise: Exposure to AWS and Azure is mandatory; GCP experience is a plus.

- Data Modeling & Cataloging: Strong understanding of data modeling concepts and tools.

- Agile Methodology: Experience in Agile project management processes.

- AdTech/MarTech Experience: Preferred but not mandatory.

- Excellent Communication: Strong written and verbal skills to collaborate effectively across teams.

🚀 Why Join?

- Work with cutting-edge tools and technologies in the rapidly evolving data engineering landscape.

- Join a high-performing team dedicated to creating impactful data solutions.

- Contribute to innovative projects in domains like AdTech and MarTech.

- Competitive compensation and growth opportunities tailored to your expertise.

- Be part of an Agile, collaborative, and dynamic work environment.

📢 Who Should Apply?

This role is ideal for data professionals with a passion for innovation and problem-solving. If you meet the criteria, have relevant experience, and are available for an in-person interview in Bangalore, we encourage you to apply!

📌 How to Apply?

Send your updated resume, along with a brief note about your experience with Adobe RTCDP and cloud platforms, to contact@aemskills.com to receive the application link and a referral for this job within the next 7 days.

💡 Boost Your Career with Us

Join us and take the next step in your data engineering journey. Whether you’re a Senior Data Engineer with 5+ years of experience or a Lead Data Engineer with 8+ years, this is your opportunity to shine.

Caution: We Do Not Charge Any Fees for Job Referrals

We strongly advise all applicants to stay vigilant against fraudulent activities. We do not charge any fees or encourage money transactions for job referrals or placement opportunities. If anyone demands payment claiming to represent us, please report it immediately. Your safety and trust are our priority!

Cybersecurity Architect | Cloud-Native Defense | AI/ML Security | DevSecOps

With over 23 years of experience in cybersecurity, I specialize in building resilient, zero-trust digital ecosystems across multi-cloud (AWS, Azure, GCP) and Kubernetes (EKS, AKS, GKE) environments. My journey began in network security—firewalls, IDS/IPS—and expanded into Linux/Windows hardening, IAM, and DevSecOps automation using Terraform, GitLab CI/CD, and policy-as-code tools like OPA and Checkov.

Today, my focus is on securing AI/ML adoption through MLSecOps, protecting models from adversarial attacks with tools like Robust Intelligence and Microsoft Counterfit. I integrate AISecOps for threat detection (Darktrace, Microsoft Security Copilot) and automate incident response with forensics-driven workflows (Elastic SIEM, TheHive).

Whether it’s hardening cloud-native stacks, embedding security into CI/CD pipelines, or safeguarding AI systems, I bridge the gap between security and innovation—ensuring defense scales with speed.

Let’s connect and discuss the future of secure, intelligent infrastructure.